As a front-end web developer and filmmaking aficionado, Héctor Monerris is always thinking about ways to mix both worlds. He's written us a case study about real-time WebGL rendering on top of video, looking into a little experiment he made using Three.js and video:

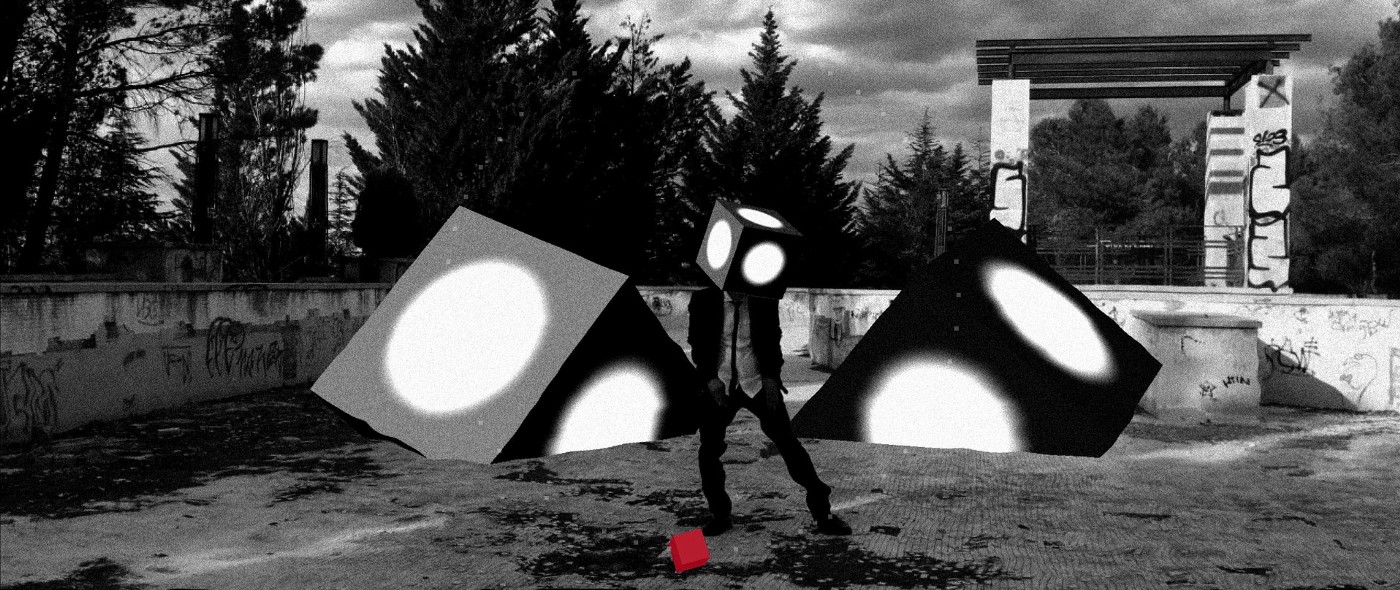

THE BOXES by Nerris - Three.js, video and tracking data little experiment

I spent some time trying to integrate video, masks and tracking data into Three.js. This is what I got.

This experiment:

- Renders a Three.js 3D scene on top of a video.

- Masks the character (my father) and fakes z-depth to let 3D objects move behind him.

- Uses shaders for post processing.

- Is frame accurate.

The tracking data

3D tracking tools reconstruct the 3D motion of the camera and of objects from a video source. Adobe After Effects includes a 3D solver, but it is very limited and only solves the camera. There are other tools out there, but I chose SynthEyes. SynthEyes has a steep learning curve, because the GUI is a complete mess. But once you start to know what you are doing, it is like entering the Matrix. It is powerful, incredibly fast, and... cheap.

I shot some test videos to try SynthEyes, and I realized that:

- 3D Tracking is a profession. Do not expect to master the technique in one afternoon. I spent a whole week trying and I just scratched the surface.

- All the 'AUTO' buttons work as expected: They do nothing.

- Long shots are hard to work with (20 seconds or more).

- For camera solving, wide shots are better because there is more information (sky, floor and sides).

- Avoid wind. Moving trees and fast clouds are not good tracking information. Neither are heavy light changes (e.g. the sun hiding behind a cloud).

- Shots with fast camera movements, 180º+ rotations or motion blur are bad candidates for automatic camera tracking.

- If the walls or floor are big smooth surfaces, you will need to add marks.

- Beware of the noise. It tricks the automatic tracker.

- Objects occupying a large area of the frame are easier to track. Smaller ones may need frame-by-frame manual adjustments.

- High contrast footage is always better.

My final shot has a slow-paced camera movement and shows a 'big head' with clear corners. The floor is also full of details, which makes the camera solving easy. It was shot with an iPhone 6S in 4K. It is a low-fi example of a 'best-case scenario' for tracking.

After solving the camera and the 'head' with SynthEyes, I exported the 3D data to After Effects because I know After Effects better than SynthEyes. Then, I created a small After Effects JSX script to save the keyframe data as a JSON file.

TIP: If you do not want to write an After Effects JSX script, you can copy the keyframes to the clipboard and paste them into a text editor. You will get human-readable coordinates.

After Effects uses a different coordinate system than Three.js, so all the coordinates need to be converted. You can use these simple formulas:

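// After Effects has Y pointing down and Z pointing away from the viewer,
// so both axes are flipped for Three.js.
function aePositionToThree( x, y, z ) {
  return new THREE.Vector3( x, -y, -z );
}

// After Effects rotations are in degrees; THREE.Euler expects radians.
// Newer Three.js releases expose degToRad as THREE.MathUtils.degToRad.
function aeRotationToThree( x, y, z ) {
  return new THREE.Euler(
    THREE.Math.degToRad( x ),
    THREE.Math.degToRad( -y ),
    THREE.Math.degToRad( -z )
  );
}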
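To show how these conversions could be applied to the exported JSON, here is a minimal sketch that does not depend on Three.js. The keyframe shape (`frame`, `position`, `rotation`) and the helper names `convertKeyframe` and `buildFrameLookup` are assumptions made for illustration; the actual file written by the JSX script may look different.

```javascript
// Hypothetical keyframe shape: { frame, position: [x, y, z], rotation: [x, y, z] }
// Rotation values are in degrees, as After Effects reports them.
const DEG_TO_RAD = Math.PI / 180;

function convertKeyframe( kf ) {
  const [ px, py, pz ] = kf.position;
  const [ rx, ry, rz ] = kf.rotation;
  return {
    frame: kf.frame,
    // Same sign flips as aePositionToThree: Y and Z are inverted.
    position: [ px, -py, -pz ],
    // Same sign flips as aeRotationToThree, converted to radians.
    rotation: [ rx * DEG_TO_RAD, -ry * DEG_TO_RAD, -rz * DEG_TO_RAD ]
  };
}

// Index the converted keyframes by frame number for direct lookup at render time.
function buildFrameLookup( keyframes ) {
  const byFrame = new Map();
  for ( const kf of keyframes ) {
    byFrame.set( kf.frame, convertKeyframe( kf ) );
  }
  return byFrame;
}
```

At render time, the entry for the current frame could be applied with `camera.position.fromArray( k.position )` and `camera.rotation.set( k.rotation[ 0 ], k.rotation[ 1 ], k.rotation[ 2 ] )`.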

And this is how to set up a Three.js camera from the After Effects camera settings:

// From After Effects Camera settings
let aeFilmWidth = 33;
let aeFocalLength = 36;

camera.aspect = window.innerWidth / window.innerHeight;
camera.filmGauge = aeFilmWidth;
camera.setFocalLength( aeFocalLength );
camera.updateProjectionMatrix();

HEY! These parameters need to be updated on every window or area resize.
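One way to handle that is to wrap the camera setup in a function and call it from a resize listener. This is only a sketch: `applyAeCamera` is a hypothetical helper name, and `camera` and `renderer` in the commented usage are assumed to exist already in your scene setup.

```javascript
// Values copied from the After Effects camera settings.
const AE_FILM_WIDTH = 33;
const AE_FOCAL_LENGTH = 36;

// `camera` is expected to behave like a THREE.PerspectiveCamera.
function applyAeCamera( camera, viewportWidth, viewportHeight ) {
  // Aspect and film gauge are set first, so they are already in place
  // when the focal length is applied.
  camera.aspect = viewportWidth / viewportHeight;
  camera.filmGauge = AE_FILM_WIDTH;
  camera.setFocalLength( AE_FOCAL_LENGTH );
  camera.updateProjectionMatrix();
}

// Browser usage (assumes `camera` and `renderer` were created elsewhere):
// window.addEventListener( 'resize', () => {
//   applyAeCamera( camera, window.innerWidth, window.innerHeight );
//   renderer.setSize( window.innerWidth, window.innerHeight );
// } );
```

If the scene is rendered into an element rather than the full window, pass that element's width and height instead of the window size.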

Binary frame number bar

Have you ever tried to sync an HTML5 video element with something else? Yes? Then you already know that the video.currentTime property is not accurate. There is a good chance of a delay of up to 500ms. For some uses, this is OK. It is definitely not OK for this experiment, because the 3D scene needs to be in perfect sync with the tracking data.

Inspired by 'Teletext', I decided to add some lines of pixels at the bottom of the video. These pixels contain the frame number, encoded into black and white (binary) stripes.

I wrote this Processing script that generates a PNG sequence with the stripes for 1,000 frames:

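int width = 1280;
int height = 720;
int frame = 0;
int digits = 20;       // number of stripes (bits) per frame number
int total = 1000;      // last frame number to generate
int filePadding = 5;   // zero padding used in the file names

void setup() {
  size( 1280, 720 );
  stroke( 255 );
}

void draw() {
  background( 0, 0, 0 );

  // Frame number as a fixed-length binary string, most significant bit first
  String b = binary( frame, digits );
  int space = width / digits;

  // One stripe per bit: white for 1, black for 0
  for ( int i = 0; i < digits; ++i ) {
    fill( b.charAt(i) == '1' ? 255 : 0 );
    rect( i * space, 0, space, height );
  }

  // Human-readable frame number, handy for debugging
  String id = nf( frame, filePadding );
  fill( 100 );
  textSize( 32 );
  text( id, 10, height / 2 );

  //A directory 'frames' is needed
  saveFrame( "frames/btc_" + id + ".png" );

  ++frame;
  // Frames 0 through 'total' have been saved; stop drawing
  if ( frame > total ) noLoop();
}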

I am new to Processing, so it is not optimized. But it did a good job!

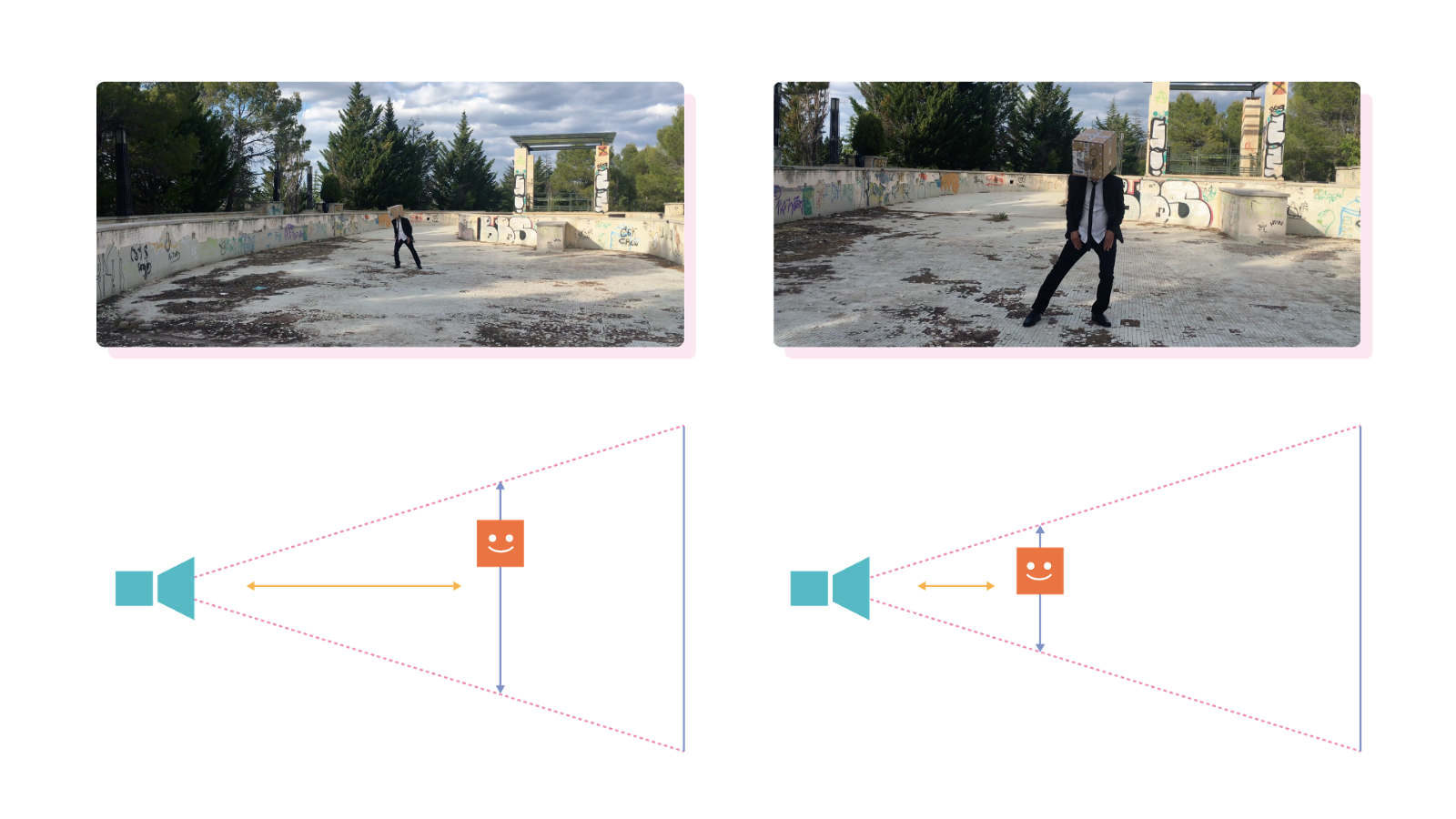
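On the browser side, the stripes have to be read back from the video to recover the frame number. The sketch below shows one way this could be done; it is not necessarily the code used in the experiment. `decodeFrameNumber` is a hypothetical helper that takes one row of RGBA pixels, for example from `getImageData()` after drawing the current video frame onto a 2D canvas.

```javascript
// Decode a frame number from one row of RGBA pixels taken from the stripe area.
// rowPixels: flat RGBA array (e.g. ImageData.data for a 1px-high region)
// rowWidth:  width of that row in pixels
// digits:    number of stripes (20 in the Processing script)
function decodeFrameNumber( rowPixels, rowWidth, digits ) {
  const stripeWidth = rowWidth / digits;
  let frame = 0;
  for ( let i = 0; i < digits; ++i ) {
    // Sample the centre of each stripe to stay away from compression blur at the edges.
    const x = Math.floor( ( i + 0.5 ) * stripeWidth );
    const red = rowPixels[ x * 4 ];
    // Threshold at mid-grey: video compression rarely leaves pure black or white.
    const bit = red > 127 ? 1 : 0;
    // The stripes are written most significant bit first.
    frame = frame * 2 + bit;
  }
  return frame;
}

// Browser usage (assumes `video` is playing, `ctx` is a 2D canvas context of the
// same size as the video, and `stripeY` is a row inside the stripe area):
// ctx.drawImage( video, 0, 0 );
// const row = ctx.getImageData( 0, stripeY, video.videoWidth, 1 ).data;
// const frame = decodeFrameNumber( row, video.videoWidth, 20 );
```

The decoded frame number can then be used to look up the tracking data for exactly the frame that is on screen, regardless of what `video.currentTime` reports.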

Faking Z-Depth

In the experiment, you will see 3D elements moving behind my father. I got that effect by creating an additional 3D plane textured with the masked video. That mask was generated using regular After Effects tools. This is the final layout for the video.

This additional 3D plane was animated along the Z axis using the position of the head. The plane scales based on its Z distance to the camera. Yeah, basic trigonometry.
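As a rough illustration of that trigonometry (a sketch under my own assumptions, not necessarily the code used in the experiment): for a perspective camera with vertical field of view `fov`, the visible height at distance `d` is `2 * d * tan(fov / 2)`, and the visible width is that height times the aspect ratio. `planeSizeAtDistance` is a hypothetical helper name.

```javascript
// Size a camera-facing plane so that it exactly fills the view at a given distance.
// fovDegrees: vertical field of view of the camera, in degrees
// aspect:     viewport width divided by height
// distance:   distance from the camera to the plane along the view axis
function planeSizeAtDistance( fovDegrees, aspect, distance ) {
  const halfFov = ( fovDegrees * Math.PI / 180 ) / 2;
  const height = 2 * distance * Math.tan( halfFov );
  return { width: height * aspect, height: height };
}

// Usage with a 1x1 plane (assumes `plane`, `camera` and `headDistance` exist):
// const size = planeSizeAtDistance( camera.fov, camera.aspect, headDistance );
// plane.scale.set( size.width, size.height, 1 );
```

Because the size grows linearly with the distance, the masked video always covers the same area of the screen while its depth in the scene follows the head.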

So... what?

I know: everybody is freaking out about augmented reality, and this experiment is something minor in comparison. But I just wanted to explore the narrative possibilities of interactive video in HTML5, and this experiment looks like a good starting point. The next step is to make things 'clickable' and see where it goes.

During this project, I had the feeling that I was reinventing the wheel most of the time. So, if you have other ideas or different approaches... I am all ears.

Thanks for reading!