Every year, 2 to 3 out of every 1,000 children in the US are born deaf or hard of hearing. Of these children, 90% are born to hearing parents, and in many cases the child is the first deaf person those parents have ever encountered.

A deaf child who is not introduced to sign language early may miss out on acquiring language. This can lead to language delay or deprivation, which has long-term negative effects on a child’s life. That is why it is so important for parents of deaf children to have the opportunity to learn American Sign Language (ASL) as early as possible.

To address language deprivation and help bridge the communication gap between deaf and hearing people, creative studio Hello Monday partnered with the American Society for Deaf Children to create Fingerspelling.xyz. Fingerspelling is an essential part of ASL, the primary language of the Deaf community. It is often used for proper nouns or to sign a word you don’t know the sign for.

What it is

Fingerspelling.xyz is a browser-based app that uses a webcam and machine learning to analyze your hand shapes so you can learn to sign the ASL alphabet correctly.

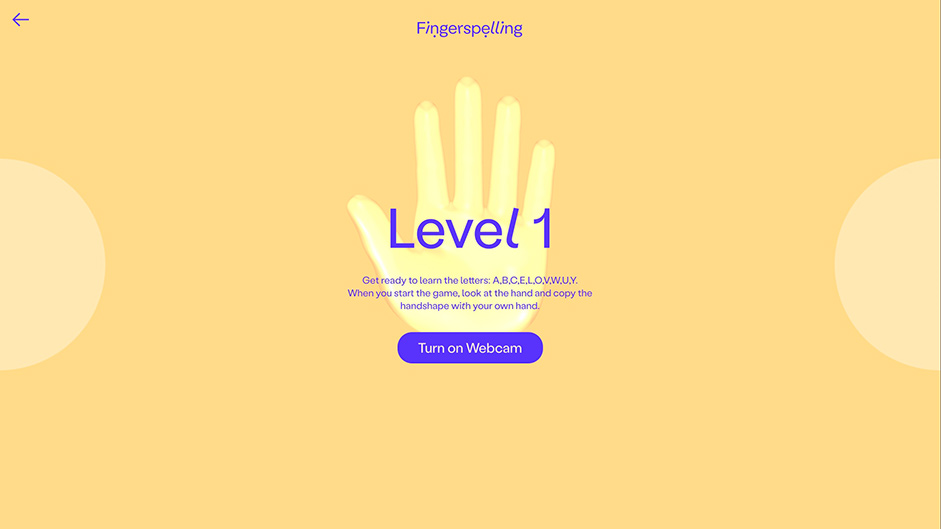

The fingerspelling game introduces the basics of ASL in a fun, playful way. Instead of reading or watching videos about fingerspelling, you learn hands-on with an online teaching tool that guides you step by step toward mastering it. The game combines hand-recognition technology with machine learning to give you real-time feedback through your webcam as you sign each letter and word. It is designed for desktop and aimed primarily at parents of deaf children, but kids will also find it a fun way to practice and improve their fingerspelling technique.

How it works

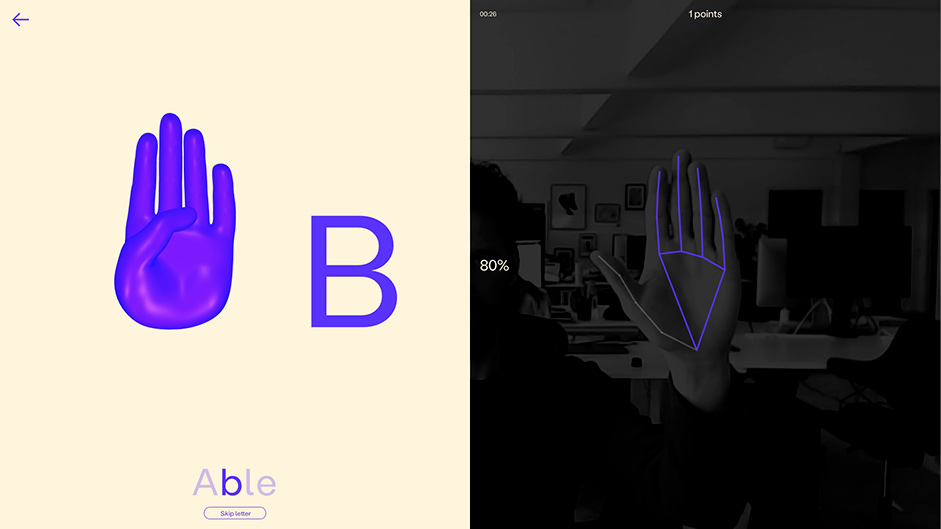

At the start, you are shown a series of words, and the app displays a 3D model of how your hand should be positioned for each letter. As you sign the word, the webcam tracks your hand movements and the app gives you feedback so you can make corrections as needed.


For example, you might be given the word ‘Able’ and asked to spell it. You are first prompted to sign the letter A. On the left, a 3D hand shows how your hand and fingers should be positioned. On the right, you see the feed from your webcam; you hold up your hand and mimic the A shown by the 3D hand. Markers on the 3D hand help you see, in real time, whether your fingers are positioned correctly. Then you move on to the next letter.
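The letter-by-letter flow described above can be modeled as a small state machine. The sketch below is a hypothetical illustration, not the project's code: the `SpellingSession` class and its `holdFrames` parameter (how many consecutive webcam frames a letter must be recognized before it counts) are assumptions, and the per-frame `accepted` flag stands in for whatever letter check the app actually runs.

```typescript
// Hypothetical sketch of the spelling flow: the user is asked for one letter
// at a time and moves on once the current letter has been recognized.
class SpellingSession {
  readonly word: string;
  // Assumption: a letter must be recognized on this many consecutive frames
  // before it is accepted, so a single lucky frame does not count.
  readonly holdFrames: number;
  private index = 0;
  private streak = 0;

  constructor(word: string, holdFrames = 1) {
    this.word = word;
    this.holdFrames = holdFrames;
  }

  // The letter the user should sign next, or undefined once the word is done.
  get currentLetter(): string | undefined {
    return this.done ? undefined : this.word[this.index].toUpperCase();
  }

  get done(): boolean {
    return this.index >= this.word.length;
  }

  // Call once per webcam frame with the result of the letter check.
  update(accepted: boolean): void {
    if (this.done) return;
    this.streak = accepted ? this.streak + 1 : 0;
    if (this.streak >= this.holdFrames) {
      this.index += 1; // move on to the next letter
      this.streak = 0;
    }
  }
}
```

In a browser, `update` would be called from the per-frame hand-tracking callback. A larger `holdFrames` trades a little responsiveness for robustness against detections that flicker between frames.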

Design

The goal was to communicate fingerspelling in the cleanest, simplest way possible, which meant taking a less-is-more approach to the design elements. The 3D hand is placed prominently in the center of the site and paired with the playful typeface Labil Grotesk.

The angles and placement of the fingers are key to communicating in sign language, so we looked for a typeface that would reflect similarly subtle visual nuances. When we came across Labil Grotesk, we felt its angled glyphs had a dynamic movement that mirrored these hand gestures. Its slightly off-kilter appearance also feels joyful and engaging, which supported our visual goals for the project.

For color, we aimed for a palette bold enough to draw people in and, at the same time, energize them to start learning to fingerspell. Capturing attention was key.

We also put a lot of work into the design of the 3D hand. We wanted it to feel friendly, but it also had to be detailed enough for users to see easily how the fingers are positioned and bent. The result is half cartoonish, half realistic.

The composition of the game screen, with the 3D hand on the left, the letter to sign in the middle, and your webcam feed on the right, was also carefully tweaked and tested. The placement of the letter in particular, large and in the center, made a huge difference to how well users learned. I think people naturally look at the 3D hand on the left and try to replicate that handshape without really understanding which letter they are signing. Without that understanding, you could easily go through a lot of words without learning anything, just copying handshapes. Showing the letter large, between the 3D hand and the webcam feed, helped users better understand the relationship between each handshape and its letter.

Technologies

The site’s main feature is hand tracking, for which we use MediaPipe Hands. The library is very easy to get started with, and it is surprisingly performant: it can still detect the hand even when parts of some fingers are hidden behind others.

The most time-consuming task was defining when a fingerspelled letter should be accepted. We set up a rule-based system for each letter that first looked at the rotation of the hand: whether it faced up, down, left, or right. If the hand orientation was correct, we then looked at how each finger was positioned and how much it was bent. This was a manual process for each letter, and it involved a lot of trial and error. To make sure we got the letters right, an ASL professor, Professor Kenneth De Haan from Gallaudet University, helped us test the different letters, and the rules required a lot of testing with different users.
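As a rough illustration of such a rule-based check, here is a hypothetical TypeScript sketch. It relies only on the MediaPipe Hands landmark layout: 21 points per hand, index 0 is the wrist, each finger has four points from base to tip, and x and y are normalized to the image size. Everything else is an assumption rather than the project's method: orientation is read from the direction from the wrist to the middle finger's base, a finger's bend is the sum of its joint angles, and the degree ranges in the placeholder rule for A are illustrative guesses, not the tuned values used on the site.

```typescript
// MediaPipe Hands reports 21 landmarks per hand. x and y are normalized to
// [0, 1] by image width and height (y grows downward); z is relative depth.
type Landmark = { x: number; y: number; z: number };

// Landmark indices for each finger, from base to tip (MediaPipe Hands layout).
const FINGERS = {
  thumb: [1, 2, 3, 4],
  index: [5, 6, 7, 8],
  middle: [9, 10, 11, 12],
  ring: [13, 14, 15, 16],
  pinky: [17, 18, 19, 20],
} as const;
type Finger = keyof typeof FINGERS;
type Orientation = "up" | "down" | "left" | "right";

// Assumed heuristic: coarse orientation from the wrist (0) to the middle-finger base (9).
function handOrientation(lm: Landmark[]): Orientation {
  const dx = lm[9].x - lm[0].x;
  const dy = lm[9].y - lm[0].y;
  if (Math.abs(dx) > Math.abs(dy)) return dx > 0 ? "right" : "left";
  return dy < 0 ? "up" : "down"; // image y axis points down
}

// Bend at joint b in degrees: 0 when a, b and c are collinear (straight).
function jointBend(a: Landmark, b: Landmark, c: Landmark): number {
  const u = [b.x - a.x, b.y - a.y, b.z - a.z];
  const v = [c.x - b.x, c.y - b.y, c.z - b.z];
  const dot = u[0] * v[0] + u[1] * v[1] + u[2] * v[2];
  const norm = Math.hypot(u[0], u[1], u[2]) * Math.hypot(v[0], v[1], v[2]);
  if (norm === 0) return 0;
  const cos = Math.min(1, Math.max(-1, dot / norm));
  return (Math.acos(cos) * 180) / Math.PI;
}

// Total bend of a finger: sum of joint bends along wrist -> base -> ... -> tip.
function fingerBend(lm: Landmark[], finger: Finger): number {
  const chain = [0, ...FINGERS[finger]];
  let total = 0;
  for (let i = 1; i < chain.length - 1; i++) {
    total += jointBend(lm[chain[i - 1]], lm[chain[i]], lm[chain[i + 1]]);
  }
  return total;
}

// A letter rule: required orientation plus an allowed bend range per finger.
type LetterRule = {
  orientation: Orientation;
  bend: Record<Finger, [min: number, max: number]>;
};

// Hypothetical placeholder rule for "A" (fist, thumb alongside the index
// finger). The degree ranges are illustrative guesses, not tuned values.
const RULES: Record<string, LetterRule> = {
  A: {
    orientation: "up",
    bend: {
      thumb: [0, 60],
      index: [150, 540],
      middle: [150, 540],
      ring: [150, 540],
      pinky: [150, 540],
    },
  },
};

// Check orientation first; only if it matches can individual fingers pass.
function checkLetter(lm: Landmark[], rule: LetterRule) {
  const fingers = {} as Record<Finger, boolean>;
  const orientationOk = handOrientation(lm) === rule.orientation;
  for (const f of Object.keys(FINGERS) as Finger[]) {
    const [min, max] = rule.bend[f];
    const bend = fingerBend(lm, f);
    fingers[f] = orientationOk && bend >= min && bend <= max;
  }
  const accepted = Object.values(fingers).every(Boolean);
  return { accepted, orientationOk, fingers };
}
```

Gating the finger checks on orientation mirrors the two-step process described above. Keeping a separate result per finger, rather than a single yes/no, is what makes per-finger visual feedback possible.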


Because we map each finger individually, we can draw lines along the fingers directly on top of the webcam feed (using a canvas) and show whether each individual finger is positioned correctly. A finger’s lines light up blue when it is in the correct position and stay gray when it is not, so you can quickly see which fingers are positioned correctly and which are not.
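A minimal sketch of such an overlay, assuming MediaPipe-style normalized landmarks and a per-finger pass/fail map like the one a rule check could produce. The `drawFingerLines` function, the `Ctx2D` type, the line width, and the exact blue and gray color values are all hypothetical. `Ctx2D` lists only the `CanvasRenderingContext2D` members the function uses, so a browser 2D context should be accepted as-is, while the logic can also be exercised outside a browser.

```typescript
type Landmark = { x: number; y: number; z: number };

// Landmark indices for each finger, base to tip (MediaPipe Hands layout).
const FINGERS = {
  thumb: [1, 2, 3, 4],
  index: [5, 6, 7, 8],
  middle: [9, 10, 11, 12],
  ring: [13, 14, 15, 16],
  pinky: [17, 18, 19, 20],
} as const;
type Finger = keyof typeof FINGERS;

// The subset of CanvasRenderingContext2D this sketch needs.
type Ctx2D = {
  strokeStyle: unknown;
  lineWidth: number;
  beginPath(): void;
  moveTo(x: number, y: number): void;
  lineTo(x: number, y: number): void;
  stroke(): void;
};

const CORRECT_COLOR = "#2f80ed"; // blue: finger matches the target letter (hypothetical shade)
const WRONG_COLOR = "#9e9e9e"; // gray: finger still needs adjusting (hypothetical shade)

// Draws one polyline per finger (wrist -> base -> ... -> tip) over the video,
// colored by whether that finger is currently positioned correctly.
function drawFingerLines(
  ctx: Ctx2D,
  lm: Landmark[],
  fingerOk: Record<Finger, boolean>,
  width: number,
  height: number,
): void {
  ctx.lineWidth = 4;
  for (const finger of Object.keys(FINGERS) as Finger[]) {
    const chain = [0, ...FINGERS[finger]];
    ctx.strokeStyle = fingerOk[finger] ? CORRECT_COLOR : WRONG_COLOR;
    ctx.beginPath();
    chain.forEach((idx, i) => {
      // Landmarks are normalized, so scale them to canvas pixels.
      const x = lm[idx].x * width;
      const y = lm[idx].y * height;
      if (i === 0) ctx.moveTo(x, y);
      else ctx.lineTo(x, y);
    });
    ctx.stroke();
  }
}
```

In the browser, this would run once per frame on a canvas layered over the video element, after clearing the previous frame with `ctx.clearRect(0, 0, width, height)`.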

To give users a clear frame of reference for how the hand and fingers should be positioned, we created a custom 3D hand that contains poses for all the letters. When the letter changes, we use the ThreeJS AnimationMixer to animate between the letters.

It’s fun to think that this highly trained machine learning model, which researchers have spent countless hours training, now does the opposite: it trains us back.

Tech Stack

Hand Tracking: MediaPipe Hands

3D: ThreeJS

Animations: GSAP

Hosting: Firebase

Company Info

Hello Monday is a creative studio that makes digital ideas, experiences, brands, and products. We’re called Hello Monday because we aim to make Mondays better. Better for the people and organizations we collaborate with, and better for the world.
